Microsoft introduced DirectStorage to Windows PCs this week. The API promises faster load times and more detailed graphics by letting game developers make apps that load graphical data from the SSD directly to the GPU. Now, Nvidia and IBM have created a similar SSD/GPU technology, but they're aiming it at the massive data sets in data centers.

Instead of targeting console or PC gaming like DirectStorage, Big accelerator Memory (BaM) is meant to give data centers quick access to vast amounts of data in GPU-intensive applications, like machine-learning training, analytics, and high-performance computing, according to a research paper spotted by The Register this week. Entitled "BaM: A Case for Enabling Fine-grain High Throughput GPU-Orchestrated Access to Storage" (PDF), the paper by researchers at Nvidia, IBM, and a few US universities proposes a more efficient way to run next-generation applications in data centers with massive computing power and memory bandwidth.

BaM also differs from DirectStorage in that the creators of the system architecture plan to make it open source.

The paper says that while CPU-orchestrated storage data access is suitable for "classic" GPU applications, such as dense neural network training with "predefined, regular, dense" data access patterns, it causes too much "CPU-GPU synchronization overhead and/or I/O traffic amplification." That makes it less suitable for next-gen applications that make use of graph and data analytics, recommender systems, graph neural networks, and other "fine-grain data-dependent access patterns," the authors write.

Like DirectStorage, BaM works alongside an NVMe SSD. According to the paper, BaM "mitigates I/O traffic amplification by enabling the GPU threads to read or write small amounts of data on-demand, as determined by the compute."
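To make "I/O traffic amplification" concrete, the toy calculation below compares how many bytes move when scattered, data-dependent reads are served in large coarse chunks versus small fine-grained blocks. It is a back-of-the-envelope sketch, not BaM's code: the 64-byte item size, the 64 KiB "coarse" transfer unit, and the 512-byte "fine" unit are all hypothetical numbers picked for illustration, and the function names are made up.

```python
# Toy model of I/O traffic amplification (all sizes are hypothetical).
# Amplification = bytes actually transferred / bytes the application needed.

def bytes_moved(offsets, item_size, granularity):
    """Bytes transferred if each access pulls in the whole aligned
    `granularity`-sized blocks it touches (each block fetched once)."""
    blocks = set()
    for off in offsets:
        first = off // granularity
        last = (off + item_size - 1) // granularity
        blocks.update(range(first, last + 1))
    return len(blocks) * granularity

def amplification(offsets, item_size, granularity):
    useful = len(set(offsets)) * item_size  # distinct items actually needed
    return bytes_moved(offsets, item_size, granularity) / useful

# 1,000 scattered 64-byte lookups, each landing 1 MiB apart (e.g. graph neighbors).
offsets = [i * (1 << 20) for i in range(1000)]
print(amplification(offsets, 64, 64 * 1024))  # coarse 64 KiB transfers: 1024.0
print(amplification(offsets, 64, 512))        # fine 512-byte transfers: 8.0
```

With sparse, unpredictable accesses, almost everything in a large chunk goes unused, which is why letting GPU threads request small pieces on demand can cut the traffic so sharply in this model.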

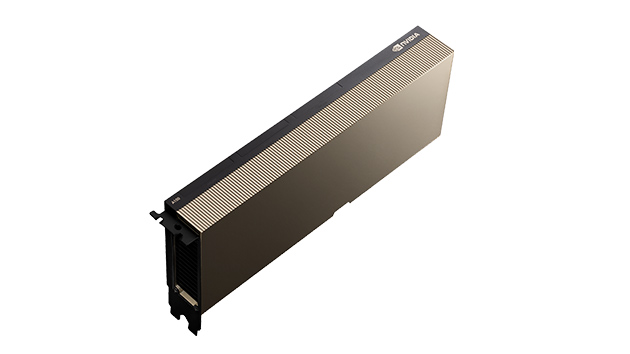

More specifically, BaM uses a GPU's onboard memory as a software-managed cache, plus a GPU thread software library. The threads receive data from the SSD and move it with the help of a custom Linux kernel driver. Researchers performed testing on a prototype system with an Nvidia A100 40GB PCIe GPU, two AMD EPYC 7702 CPUs with 64 cores each, and 1TB of DDR4-3200 memory. The system runs Ubuntu 20.04 LTS.

Researchers’ prototype system included an Nvidia A100 40GB PCIe GPU (pictured).
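The idea of a software-managed cache in front of the SSD can be sketched as a block cache that serves fine-grained reads and only goes to the slow device on a miss. This is a single-threaded conceptual model under stated assumptions, not BaM's implementation: the real cache lives in GPU memory and is shared by thousands of GPU threads, and the `BlockCache` class, its LRU eviction policy, and the in-memory `bytes` object standing in for the SSD are all hypothetical choices for illustration.

```python
from collections import OrderedDict

class BlockCache:
    """Hypothetical software-managed cache of fixed-size blocks.

    `backing` stands in for the SSD; `self.blocks` stands in for GPU memory.
    LRU eviction is an assumption made for simplicity.
    """

    def __init__(self, backing: bytes, block_size: int, capacity_blocks: int):
        self.backing = backing
        self.block_size = block_size
        self.capacity = capacity_blocks
        self.blocks = OrderedDict()  # block id -> data, least recently used first
        self.hits = 0
        self.misses = 0

    def _get_block(self, block_id: int) -> bytes:
        if block_id in self.blocks:
            self.hits += 1
            self.blocks.move_to_end(block_id)  # mark as most recently used
            return self.blocks[block_id]
        self.misses += 1
        start = block_id * self.block_size
        data = self.backing[start:start + self.block_size]  # simulated device read
        self.blocks[block_id] = data
        if len(self.blocks) > self.capacity:
            self.blocks.popitem(last=False)  # evict the least recently used block
        return data

    def read(self, offset: int, length: int) -> bytes:
        """Return bytes [offset, offset + length), touching only overlapping blocks."""
        end = offset + length
        if offset < 0 or end > len(self.backing):
            raise ValueError("read outside the backing device")
        out = bytearray()
        pos = offset
        while pos < end:
            block_id = pos // self.block_size
            block = self._get_block(block_id)
            within = pos - block_id * self.block_size
            take = min(end - pos, self.block_size - within)
            out += block[within:within + take]
            pos += take
        return bytes(out)

ssd = bytes(range(256)) * 16          # 4,096 bytes of fake device data
cache = BlockCache(ssd, block_size=512, capacity_blocks=2)
cache.read(10, 4)      # miss on block 0
cache.read(500, 20)    # hit on block 0, miss on block 1
cache.read(1100, 8)    # miss on block 2, evicts block 0
print(cache.hits, cache.misses)  # 1 3
```

Small reads that fall in already-cached blocks never touch the device, which is the property that lets a modest amount of fast memory absorb many fine-grained requests.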

The authors noted that even a "consumer-grade" SSD could support BaM with app performance that is "competitive against a much more expensive DRAM-only solution."
